another alpha leak. same technique we used for KernelBench. it’s a universal approach in its simplest form.

examples are all you need: get one good result or one single improvement, add to context, get more good results with improved context, add those to context, ad inf…
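a minimal sketch of that loop, under loud assumptions: `generate` stands in for a model call that sees the current best examples as context, and `score` stands in for whatever ranks outputs. Both are hypothetical placeholders, as are `improve_with_examples`, the toy string-matching task, and every parameter name. This is not the authors' actual KernelBench pipeline, just the shape of the idea.

```python
import random
from typing import Callable, List, Tuple

def improve_with_examples(
    generate: Callable[[List[str]], str],  # hypothetical stand-in for a model call
    score: Callable[[str], float],         # hypothetical stand-in for a ranker
    rounds: int = 20,
    samples_per_round: int = 4,
    tape_size: int = 3,
) -> List[Tuple[float, str]]:
    """Keep a small 'tape' of the best outputs and feed it back as context."""
    tape: List[Tuple[float, str]] = []  # (score, output), best first
    for _ in range(rounds):
        context = [output for _, output in tape]
        candidates = [generate(context) for _ in range(samples_per_round)]
        # Merge old and new results, dropping duplicate outputs.
        merged = {output: s for s, output in tape}
        for candidate in candidates:
            merged[candidate] = score(candidate)
        # Keep only the top-ranked outputs as the next round's context.
        ranked = sorted(((s, o) for o, s in merged.items()), reverse=True)
        tape = ranked[:tape_size]
    return tape

# Toy task (an assumption, for illustration only): match a target string.
TARGET = "kernel"
ALPHABET = "abcdefghijklmnopqrstuvwxyz"

def toy_score(text: str) -> float:
    """Count positions where the text agrees with TARGET."""
    return float(sum(a == b for a, b in zip(text, TARGET)))

def make_toy_generator(rng: random.Random) -> Callable[[List[str]], str]:
    """Build a fake 'model': random with no context, else mutate an example."""
    def generate(context: List[str]) -> str:
        if not context:
            return "".join(rng.choice(ALPHABET) for _ in TARGET)
        chars = list(rng.choice(context))
        chars[rng.randrange(len(chars))] = rng.choice(ALPHABET)
        return "".join(chars)
    return generate

if __name__ == "__main__":
    best = improve_with_examples(make_toy_generator(random.Random(0)), toy_score, rounds=200)
    print(best[0])
```

because the tape only ever keeps the top-ranked outputs, the best score never goes down from one round to the next. in a real setup the generator would be a model prompted with the tape, and the ranker would be a benchmark or verifier.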

22 Jul, 11:19

Recently, OpenAI and Google reached IMO gold-medal level with their new experimental models.

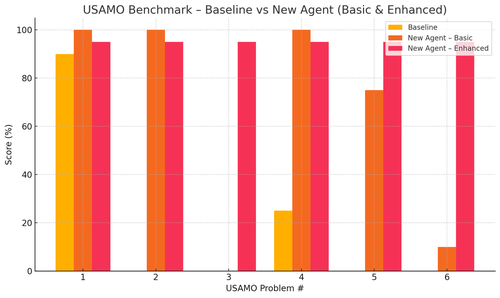

But our team reached the same level with just o4-mini-high and our agent systems, and now we are open-sourcing it.

We saw especially large improvements on the USAMO benchmark: the baseline was almost 0, but our agent averaged 90%.

We could also theoretically prove results from recent arXiv papers when given only the key research idea.

there is no point training small models really… you are better finding the ideal program to feed to the biggest machines.

if you want the best output distribution, you need to find the ideal input distribution, like practice.

you can get there from nothing as long as you have a way to rank your outputs.

these guys’ technique seems overengineered tho, can likely be much simpler.

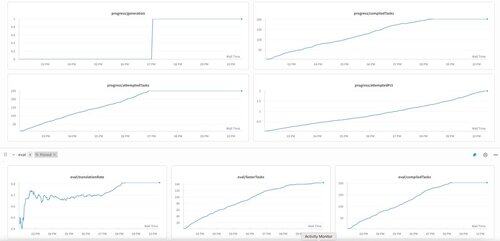

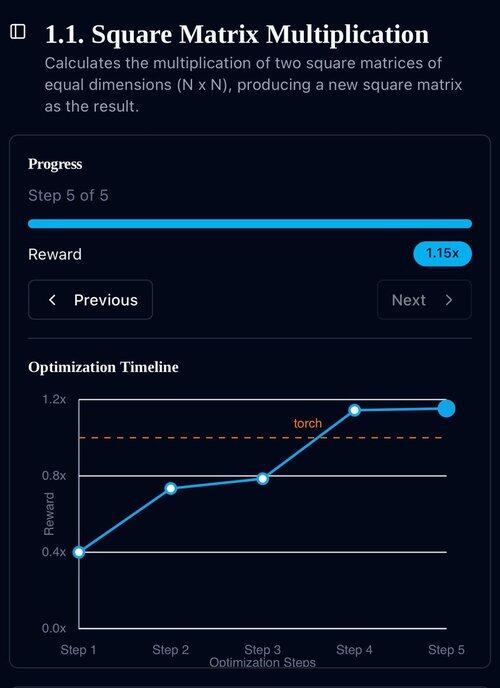

KernelBench’s first generation on o3-mini. we just called this “self-improvement.”

30 Apr 2025

we have an unverified SOTA result on KernelBench with o3-mini and an evolutionary examples tape: 208/250 claimed speedups, including 3 for Level 4 (prev untouched).

would be grateful for any help reviewing the optimized KernelBench kernels at .

thank you to @anneouyang and Stanford’s @ScalingIntelLab for agreeing to review them.
