Trendaavat aiheet

#

Bonk Eco continues to show strength amid $USELESS rally

#

Pump.fun to raise $1B token sale, traders speculating on airdrop

#

Boop.Fun leading the way with a new launchpad on Solana.

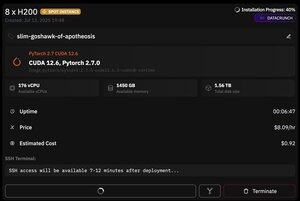

but what's great about @PrimeIntellect is the availability of spot instances -- today I got a node with 8xH200 for just $8/hr!

I'll show how I quickly set up moonshotai/Kimi-K2-Instruct inference using vLLM.

1. Once you have SSH access to your pod (expect to wait about 10 minutes), create your project and install the required libraries:

apt update && apt install htop tmux

uv init

uv venv -p 3.12

source .venv/bin/activate

export UV_TORCH_BACKEND=auto

export HF_HUB_ENABLE_HF_TRANSFER="1"

uv pip install vllm blobfile datasets huggingface_hub hf_transfer

After that, open a tmux session so the server keeps running if your SSH connection drops.

2. To start serving the model, simply run vllm serve:

vllm serve moonshotai/Kimi-K2-Instruct --trust-remote-code --dtype bfloat16 --max-model-len 12000 --max-num-seqs 8 --quantization fp8 --tensor-parallel-size 8
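
A rough back-of-the-envelope check on why the serve command keeps --max-model-len and --max-num-seqs small. The figures are assumptions not stated in the thread: roughly 1T total parameters for Kimi-K2, 1 byte per parameter in FP8, 141 GB of HBM per H200, and vLLM's default gpu_memory_utilization of 0.9. Treat it as an estimate, not a measurement.

```python
def memory_budget(total_params, bytes_per_param, gpus, hbm_per_gpu_gb, utilization):
    """Estimate weight size versus the GPU memory vLLM is allowed to use (in GB)."""
    weights_gb = total_params * bytes_per_param / 1e9
    usable_gb = gpus * hbm_per_gpu_gb * utilization
    return {
        "weights_gb": weights_gb,
        "usable_gb": usable_gb,
        # What is left for KV cache and activations across all GPUs.
        "headroom_gb": usable_gb - weights_gb,
    }


# All inputs below are assumptions (see the note above), not values from the thread.
budget = memory_budget(
    total_params=1.0e12,   # assumed ~1T total parameters
    bytes_per_param=1,     # FP8 stores one byte per parameter
    gpus=8,                # the 8xH200 node
    hbm_per_gpu_gb=141,    # assumed H200 memory capacity
    utilization=0.9,       # vLLM's default gpu_memory_utilization
)
print(budget)
```

Under these assumptions the weights take about 1000 GB of roughly 1015 GB usable, leaving on the order of 15 GB across all eight GPUs for KV cache and activations, which may be why context length and batch size are capped so tightly.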

The actual checkpoint download is the slow part: even with hf_transfer it takes about 1 hr (does anyone know a quicker solution, or a way to mount an already-downloaded checkpoint?)
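
Because the download and weight loading take this long, it can help to poll the server instead of watching the tmux pane. This is a minimal sketch, assuming the server listens on vLLM's default port 8000 and answers GET /v1/models once it is ready; the helper names is_ready and wait_until_ready are hypothetical.

```python
import time
import urllib.request


def is_ready(base_url, timeout=5.0, opener=urllib.request.urlopen):
    """Return True if GET {base_url}/v1/models answers with HTTP 200."""
    url = f"{base_url.rstrip('/')}/v1/models"
    try:
        with opener(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection refused, timeouts and HTTP errors
        # (urllib.error.URLError is a subclass of OSError).
        return False


def wait_until_ready(base_url, max_wait=2 * 60 * 60, interval=30.0,
                     probe=is_ready, sleep=time.sleep):
    """Poll until the server responds; True on success, False once max_wait elapses."""
    deadline = time.monotonic() + max_wait
    while time.monotonic() < deadline:
        if probe(base_url):
            return True
        sleep(interval)
    return False


# Example call (assumed local address; run it on the pod itself):
# wait_until_ready("http://localhost:8000")
```

The probe and sleep parameters exist only so the loop can be exercised without a live server.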

3. Then, in a new tmux pane, install cloudflared and start a Cloudflare quick tunnel:

install cloudflared

cloudflared tunnel --url

That's basically it! An OpenAI-compatible server will be available at the URL provided by Cloudflare. I just use my simple wrapper over the openai client to generate lots of synthetic data through it.
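
The wrapper itself is not shown in the thread, so here is a minimal sketch of what such a client could look like. It is not the author's code: it swaps the openai package for Python's standard library to stay dependency-free, and it assumes the server exposes the usual OpenAI-style /v1/chat/completions route. The function names and the placeholder base URL are hypothetical.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor

MODEL = "moonshotai/Kimi-K2-Instruct"  # the model name passed to `vllm serve`


def build_chat_request(base_url, prompt, max_tokens=512, temperature=0.7):
    """Build a POST request for the OpenAI-style chat completions route."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body):
    """Pull the assistant message out of a chat completions JSON response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def generate(base_url, prompt, timeout=600, **kwargs):
    """Send one prompt and return the generated text."""
    request = build_chat_request(base_url, prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_text(resp.read())


def generate_many(base_url, prompts, workers=8, send=generate):
    """Generate completions for many prompts, preserving input order.

    workers=8 mirrors --max-num-seqs 8 from the serve command, so the
    client does not send more requests than the server batches at once.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda p: send(base_url, p), prompts))


# Example call (replace the placeholder with the URL cloudflared prints):
# texts = generate_many("https://<your-tunnel-url>", ["Write a haiku about GPUs."])
```

Keep prompt length plus max_tokens under the 12000-token limit set by --max-model-len, otherwise the server will reject the request.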
