
The PUMP public sale is over. I had originally placed a large order on Bybit as well, with only half of my allocation on-chain, and in the end only the on-chain part went through. Fortunately I had not hedged in advance...

Recently, there has been a lot of discussion in the AI community about VLA (Vision-Language-Action).

So I looked into whether anyone was building VLA-related projects on-chain, found CodecFlow (@Codecopenflow), and bought a little.

== What is CodecFlow doing? ==

A brief introduction: VLA is a model architecture that lets AI not just "speak" but "do".

Traditional LLMs (like GPT) can only understand language and give suggestions; they cannot act hands-on, click on screens, or grab objects.

A VLA model integrates three capabilities:

1. Vision: Understand images, screenshots, camera inputs, or sensor data

2. Language: Understand human natural language instructions

3. Action: Generate executable commands, such as mouse clicks, keyboard input, and control of the robotic arm
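To make the three capabilities concrete, here is a minimal Python sketch of the interface they imply: an image-like observation and a text instruction go in, a structured action comes out. All names here (`Observation`, `Click`, `TypeText`, `toy_policy`) are made up for illustration and are not CodecFlow's or LeRobot's API; the "model" is a trivial stub standing in for a real neural network.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Observation:
    """Vision input: a screenshot or camera frame as rows of pixel values."""
    pixels: List[List[int]]


@dataclass
class Click:
    """Action output: a mouse click at screen coordinates."""
    x: int
    y: int


@dataclass
class TypeText:
    """Action output: keyboard input."""
    text: str


Action = Union[Click, TypeText]


def toy_policy(obs: Observation, instruction: str) -> Action:
    """Hypothetical stand-in for a VLA model: (vision, language) -> action.

    If the instruction starts with "click", it clicks the brightest pixel;
    otherwise it types the instruction text back.
    """
    if instruction.lower().startswith("click"):
        # Scan every pixel and keep the one with the highest value.
        value, x, y = max(
            (value, x, y)
            for y, row in enumerate(obs.pixels)
            for x, value in enumerate(row)
        )
        return Click(x=x, y=y)
    return TypeText(text=instruction)
```

The point of the sketch is the output type: instead of free-form text, the model returns something a mouse, keyboard, or robot controller can execute directly.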

CodecFlow is building VLA on-chain: the whole process can also be recorded on-chain, where it can be audited, verified, and settled.

In simple terms, it is infrastructure for "AI bots".
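The post does not say how CodecFlow makes a run auditable. As one possible illustration only, here is a sketch of a hash-chained step log, a common way to make a sequence of records tamper-evident. The record layout and the function names (`record_hash`, `build_log`, `verify_log`) are assumptions for this example and not the project's actual design.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # assumed starting value for the chain


def record_hash(prev_hash: str, step: Dict[str, str]) -> str:
    """Hash one step together with the hash of the step before it."""
    payload = json.dumps(step, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def build_log(steps: List[Dict[str, str]]) -> List[dict]:
    """Turn a list of agent steps into a hash-chained log."""
    log = []
    prev = GENESIS
    for step in steps:
        digest = record_hash(prev, step)
        log.append({"step": dict(step), "hash": digest})
        prev = digest
    return log


def verify_log(log: List[dict]) -> bool:
    """Recompute every hash; any edited step breaks the chain."""
    prev = GENESIS
    for entry in log:
        if record_hash(prev, entry["step"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

With a log like this, only the final hash would need to be published for anyone holding the full log to check that no step was changed afterwards.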

== Why am I paying special attention to this project? ==

I found out that their developers are core contributors to LeRobot, the hottest open-source project in the VLA space!

LeRobot is the leading open-source foundation for building VLA models, including lightweight ones such as SmolVLA that can run on a laptop.

This means the team really understands VLA architecture and robotics.

I see that they are still building, and the token price is also rising steadily. I am very optimistic about the VLA track, and judging from the overall trend, VLA and robots are indeed where the market is heading.

• Web2 giants (Google, Meta, Tesla) are now fully committed to VLA and robot training.

• Few Web3 projects offer VLA applications that can actually perform tasks.

• VLA has the opportunity to play a huge role in scenarios such as DePIN, Web Automation, and on-chain AI agent execution.

CA:69LjZUUzxj3Cb3Fxeo1X4QpYEQTboApkhXTysPpbpump

Always DYOR.

26.6.2025

What is a $CODEC Operator?

It’s where Vision-Language-Action models finally make AI useful for real work.

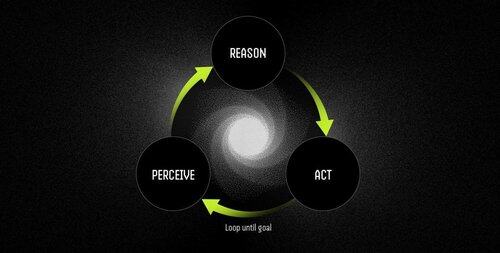

An Operator is an autonomous software agent powered by VLA models that performs tasks through a continuous perceive-reason-act cycle.

LLMs can think and talk brilliantly, but they can’t point, click, or grab anything. They’re pure reasoning engines with zero grounding in the physical world.

VLAs combine visual perception, language understanding, and structured action output in a single forward pass. While an LLM describes what should happen, a VLA model actually makes it happen by emitting coordinates, control signals, and executable commands.

The Operator workflow is:

- Perception: captures screenshots, camera feeds, or sensor data.

- Reasoning: processes observations alongside natural-language instructions using the VLA model.

- Action: executes decisions through UI interactions or hardware control—all in one continuous loop.
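The perceive-reason-act cycle above can be sketched as a plain loop. This is a toy under stated assumptions: `ToyEnvironment`, `toy_model`, and `run_operator` are hypothetical names, the "screen" is a single row where a cursor must reach a target, and the rule-based `toy_model` stands in for a real VLA forward pass.

```python
from typing import Callable, List, Tuple

Observation = Tuple[int, int]  # toy "screenshot": (cursor position, target position)


class ToyEnvironment:
    """Hypothetical 1-D screen: a cursor must reach a target cell."""

    def __init__(self, cursor: int, target: int) -> None:
        self.cursor = cursor
        self.target = target

    def capture(self) -> Observation:
        # Perception: stand-in for a screenshot, camera frame, or sensor read.
        return (self.cursor, self.target)

    def execute(self, action: str) -> None:
        # Action: stand-in for a UI interaction or hardware command.
        if action == "left":
            self.cursor -= 1
        elif action == "right":
            self.cursor += 1


def toy_model(observation: Observation, instruction: str) -> str:
    """Reasoning: stand-in for the VLA model (ignores the instruction text)."""
    cursor, target = observation
    if cursor == target:
        return "done"
    return "right" if cursor < target else "left"


def run_operator(
    env: ToyEnvironment,
    model: Callable[[Observation, str], str],
    instruction: str,
    max_steps: int = 20,
) -> List[str]:
    """Run perceive -> reason -> act until the model says "done"."""
    history: List[str] = []
    for _ in range(max_steps):
        observation = env.capture()               # perceive
        action = model(observation, instruction)  # reason
        history.append(action)
        if action == "done":
            break
        env.execute(action)                       # act
    return history
```

Each pass re-observes the environment before deciding, so the loop reacts to whatever state it actually finds rather than to a plan fixed in advance. The `max_steps` cap is a design choice of this sketch to keep a confused model from looping forever.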

Examples: LLM vs. Operator Powered by VLA Model

Scheduling a Meeting

LLM: Provides a detailed explanation of calendar management, outlining steps to schedule a meeting.

Operator with VLA Model:

- Captures the user's desktop.

- Identifies the calendar application (e.g., Outlook, Google Calendar).

- Navigates to Thursday, creates a meeting at 2 PM, and adds attendees.

- Adapts automatically to user interface changes.
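The last bullet, adapting to interface changes, is the difference between clicking a remembered coordinate and first looking for the target. A toy comparison, with every name (`Screen`, `scripted_click`, `locate`, `adaptive_click`) invented for illustration and a dictionary lookup standing in for real visual grounding:

```python
from typing import Dict, Optional, Tuple

Screen = Dict[Tuple[int, int], str]  # maps (x, y) to the label of the element there


def scripted_click(screen: Screen) -> Optional[str]:
    """Fixed-coordinate script: always clicks (10, 20), whatever is there."""
    return screen.get((10, 20))


def locate(screen: Screen, label: str) -> Optional[Tuple[int, int]]:
    """Hypothetical stand-in for visual grounding: find an element by label."""
    for position, element in screen.items():
        if element == label:
            return position
    return None


def adaptive_click(screen: Screen, label: str) -> Optional[str]:
    """Looks at the current screen first, then clicks wherever the target is."""
    position = locate(screen, label)
    return screen[position] if position is not None else None


old_layout: Screen = {(10, 20): "Save", (10, 60): "Cancel"}
new_layout: Screen = {(10, 20): "Cancel", (200, 20): "Save"}  # button moved
```

On `old_layout` both approaches hit "Save". On `new_layout` the scripted click lands on "Cancel", while the adaptive one still finds "Save" at its new position.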

Robotics: Sorting Objects

LLM: Generates precise written instructions for sorting objects, such as identifying and organizing red components.

Operator with VLA Model:

- Observes the workspace in real time.

- Identifies red components among mixed objects.

- Plans collision-free trajectories for a robotic arm.

- Executes pick-and-place operations, dynamically adjusting to new positions and orientations.

VLA models finally bridge the gap between AI that can reason about the world and AI that can actually change it. They’re what transform automation from fragile rule-following into adaptive problem-solving—intelligent workers.

"Traditional scripts break when the environment changes, but Operators use visual understanding to adapt in real time, handling exceptions instead of crashing on them."
