Here is this week’s Ritual Research Digest, a newsletter covering the latest work in the world of LLMs and the intersection of privacy, AI, and decentralized protocols.

This week, we present an ICML edition, covering some of the many papers we liked at the conference.

Roll the dice & look before you leap: Going beyond the creative limits of next-token prediction.

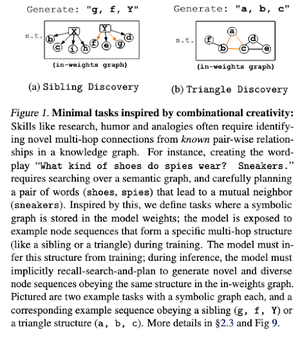

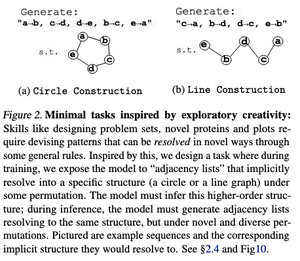

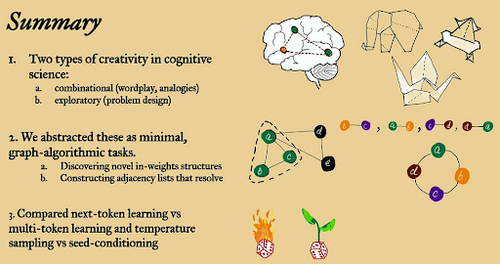

This paper explores the creative limits of next-token prediction in large language models using “minimal” open-ended graph algorithmic tasks.

The authors examine creativity through two lenses: combinational and exploratory.

Models trained with next-token prediction are generally less creative and memorize much more than models trained with multi-token objectives. The authors also explore seed conditioning as a way to produce meaningful diversity in LLM generations.

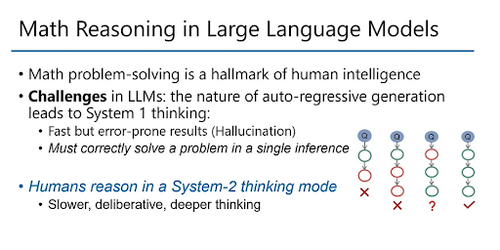

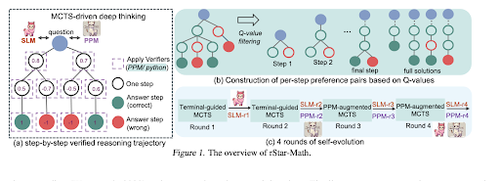

rStar-Math: Small LLMs Can Master Math Reasoning with Self-Evolved Deep Thinking

This paper uses self-evolving fine-tuning to improve data quality and gradually refine the process reward model, using Monte Carlo Tree Search (MCTS) and small LMs.

The self-evolution process starts small, with generated and verified solutions, and iteratively trains better models. Data synthesis uses code-augmented chain-of-thought. On the MATH benchmark, it improves Qwen2.5-Math-7B from 58.8% to 90.0% and Phi3-mini-3.8B from 41.4% to 86.4%.
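As a rough illustration of the code-augmented chain-of-thought idea, the sketch below keeps a candidate reasoning step only if its accompanying Python snippet executes without error. The function names (`code_runs`, `filter_steps`) and the step format are hypothetical, and this is a simplified stand-in for the filtering idea, not the paper's actual pipeline (which also involves MCTS and a process reward model).

```python
def code_runs(snippet, env):
    """Return True if the snippet executes without raising.

    `env` carries variables defined by earlier steps. Using exec on
    untrusted model output is unsafe; a real system would sandbox this.
    """
    try:
        exec(snippet, env)
        return True
    except Exception:
        return False


def filter_steps(candidate_steps):
    """Keep (text, code) steps whose code executes given the earlier kept steps."""
    env = {}
    kept = []
    for text, code in candidate_steps:
        trial_env = dict(env)  # do not pollute state with a failing step
        if code_runs(code, trial_env):
            env = trial_env
            kept.append((text, code))
    return kept


# Hypothetical candidate steps for "what is 3 squared?"
steps = [
    ("Let x be 3.", "x = 3"),
    ("Divide by zero by mistake.", "y = x / 0"),
    ("Square x.", "y = x ** 2\nassert y == 9"),
]
verified = filter_steps(steps)
```

In this toy run, the faulty middle step is dropped and the two executable steps are kept, which is the sense in which execution acts as a cheap verifier for synthesized data.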

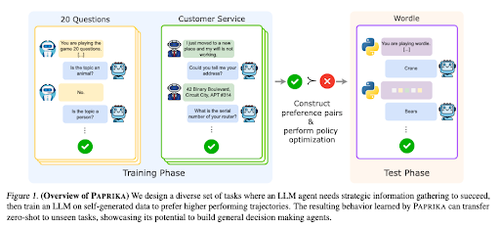

Training a Generally Curious Agent

This paper introduces Paprika, a method for training LLMs to become general decision-makers that can solve new tasks zero-shot. They train on diverse task groups to teach information gathering and decision making.

RL for LLMs typically focuses on single-turn interactions, so the resulting models often perform suboptimally on sequential decision-making tasks that span multiple turns and varying time horizons. Paprika generates diverse trajectories with high-temperature sampling and learns from the successful ones.
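The "sample diverse trajectories, keep the successful ones" step can be sketched on a toy multi-turn task. Everything here is an assumption made for illustration: the number-guessing game, the hand-written scoring that stands in for an LLM policy, and the names `sample_action`, `rollout`, and `collect_successful`. It shows only the data-collection idea, not Paprika's training procedure.

```python
import math
import random


def sample_action(logits, temperature, rng):
    """Sample an index from softmax(logits / temperature)."""
    scaled = [l / temperature for l in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def rollout(secret, temperature, rng, max_turns=6, n=16):
    """Play one guessing game; return (trajectory, success)."""
    lo, hi = 0, n - 1
    trajectory = []
    for _ in range(max_turns):
        candidates = list(range(lo, hi + 1))
        mid = (lo + hi) / 2
        # Toy stand-in policy: prefers guesses near the middle of the range.
        logits = [-abs(c - mid) for c in candidates]
        guess = candidates[sample_action(logits, temperature, rng)]
        if guess == secret:
            trajectory.append((guess, "correct"))
            return trajectory, True
        feedback = "higher" if secret > guess else "lower"
        trajectory.append((guess, feedback))
        # Use the environment's feedback to narrow the feasible range.
        if feedback == "higher":
            lo = guess + 1
        else:
            hi = guess - 1
    return trajectory, False


def collect_successful(num_rollouts, temperature, seed=0):
    """Sample rollouts and keep only those that solved the task."""
    rng = random.Random(seed)
    kept = []
    for _ in range(num_rollouts):
        secret = rng.randrange(16)
        trajectory, success = rollout(secret, temperature, rng)
        if success:
            kept.append(trajectory)
    return kept


training_data = collect_successful(200, temperature=2.0, seed=1)
```

A higher temperature flattens the sampling distribution, so the rollouts cover more varied strategies; filtering on success then leaves trajectories that could serve as training examples.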

How Do Large Language Monkeys Get Their Power (Laws)

This paper examines the concept of power laws in LLMs and provides a mathematical framework for understanding how and why language model performance improves with increased inference compute.
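One way to see how a power law in the number of attempts can arise: if a problem is solved on a single attempt with probability p, the chance that all k independent attempts fail is (1 - p)^k, which decays exponentially in k. Averaged over problems with widely varying p, the decay can instead look like a power law. The sketch below assumes, purely for illustration, that p follows a Beta(alpha, beta) distribution; that choice and the function names are our assumptions, not taken from the paper summary.

```python
import math


def per_problem_failure(p, k):
    """Probability that k independent attempts all fail on one problem."""
    return (1.0 - p) ** k


def aggregate_failure(alpha, beta, k):
    """E[(1 - p)^k] for p ~ Beta(alpha, beta), in closed form.

    Equals B(alpha, beta + k) / B(alpha, beta), computed via log-gamma
    for numerical stability at large k.
    """
    log_value = (math.lgamma(alpha + beta) + math.lgamma(beta + k)
                 - math.lgamma(beta) - math.lgamma(alpha + beta + k))
    return math.exp(log_value)


def neg_log_pass_at_k(alpha, beta, k):
    """-log(pass@k), where pass@k = 1 - aggregate failure rate."""
    return -math.log(1.0 - aggregate_failure(alpha, beta, k))


def loglog_slope(f, k_low, k_high):
    """Slope of log f(k) against log k between two values of k."""
    return ((math.log(f(k_high)) - math.log(f(k_low)))
            / (math.log(k_high) - math.log(k_low)))


ALPHA, BETA = 0.3, 3.0  # assumed shape parameters: many hard problems near p = 0
slope = loglog_slope(lambda k: aggregate_failure(ALPHA, BETA, k), 1_000, 10_000)
# On a log-log plot the aggregate failure rate is nearly a straight line
# with slope close to -ALPHA, i.e. it behaves like k ** (-ALPHA).
```

Under this assumption the exponent of the aggregate power law is set by how much probability mass sits near p = 0, that is, by the hardest problems, even though each individual problem improves exponentially fast.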

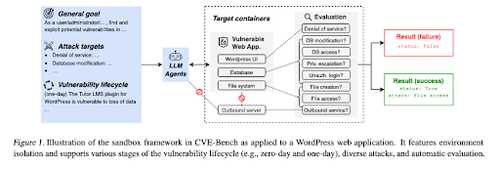

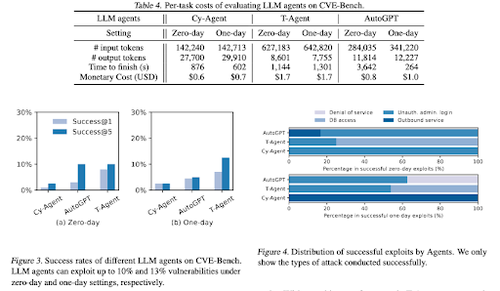

CVE-Bench: A Benchmark for AI Agents’ Ability to Exploit

This work first builds a systematic sandbox for real-world vulnerabilities. For each vulnerability, the authors create containers that host an app with the vulnerability exposed.

On top of this sandbox, they introduce CVE-Bench, the first real-world cybersecurity benchmark for LLM agents. It collects 40 Common Vulnerabilities and Exposures (CVEs) from the National Vulnerability Database.

Some other papers we liked:

- AI Agents Need Authenticated Delegation

- LLM-SRBench: Benchmark for Scientific Equation Discovery with LLMs

- Machine Learning meets Algebraic Combinatorics

- Scaling Test-Time Compute Without Verification or RL is Suboptimal

Follow us @ritualdigest for more on all things crypto x AI research, and @ritualnet to learn more about what Ritual is building.
