
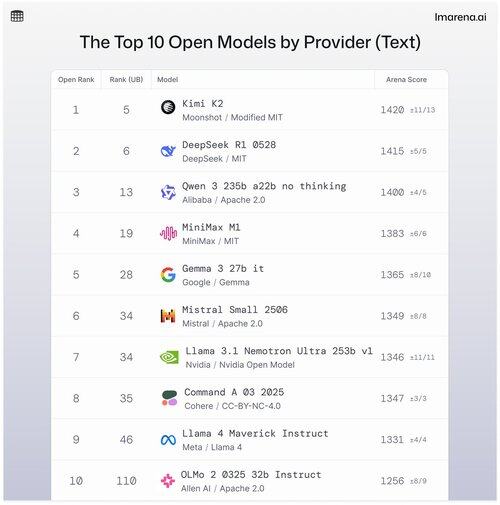

🧵Top 10 Open Models by Provider

Though proprietary models often top the charts, open models are also paired in battle mode and ranked on our public leaderboards.

Here are the top 10, counting only the highest-ranked open model from each provider.

- #1 Kimi K2 (Modified MIT) @Kimi_Moonshot

- #2 DeepSeek R1 0528 (MIT) @deepseek_ai

- #3 Qwen 235b a22b no thinking (Apache 2.0) @alibaba_qwen

- #4 MiniMax M1 (MIT) @minimax_ai

- #5 Gemma 3 27b it (Gemma) @googledeepmind

- #6 Mistral Small Ultra (Apache 2.0) @mistral_ai

- #7 Llama 3.1 Nemotron Ultra 253b v1 (Nvidia Open Model) @nvidia

- #8 Command A (Cohere) @cohere

- #9 Llama 4 Maverick Instruct (Llama 4) @aiatmeta

- #10 OLMo 2 32b Instruct (Apache 2.0) @allen_ai

See thread to learn a bit more about the top 5 in this list 👇

Kimi K2 - #1 in the Open Arena!

If you've been following open-source models, you've likely seen this new model from rising AI company Moonshot AI making waves as one of the most impressive open-source LLMs to date. Our community also tells us they love the way Kimi K2 responds: it is humorous without sounding too robotic.

Kimi K2 is built on a Mixture-of-Experts (MoE) architecture with a total of 1 trillion parameters, of which 32 billion are activated for each token. This design lets the model draw on a very large parameter pool while keeping per-token compute closer to that of a much smaller dense model.
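To make the "active parameters" idea concrete, here is a toy sketch of generic top-k expert routing in plain Python. It is not Kimi K2's actual router: the number of experts, `k`, and the simple linear gate are illustrative assumptions, and `moe_layer`, `make_expert`, and the toy experts are hypothetical names. The point it shows is that only the k highest-scoring experts run for a given token.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a short list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def moe_layer(x, router_weights, experts, k=2):
    """Toy top-k MoE layer (hypothetical): route token vector x to k experts.

    router_weights: one weight vector per expert; its dot product with x is the gate score.
    experts: callables mapping a vector to a vector of the same length.
    Returns the gate-weighted sum of the chosen experts' outputs and their indices.
    """
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the k best-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda e: scores[e], reverse=True)[:k]
    gates = softmax([scores[e] for e in chosen])
    out = [0.0] * len(x)
    for g, e in zip(gates, chosen):
        y = experts[e](x)
        out = [o + g * y_i for o, y_i in zip(out, y)]
    return out, chosen

# Usage: 4 toy experts, each scaling its input by a different factor.
calls = []  # records which experts actually ran

def make_expert(idx):
    def expert(v):
        calls.append(idx)
        return [(idx + 1) * v_i for v_i in v]
    return expert

experts = [make_expert(i) for i in range(4)]
router_weights = [[0.1, 0.0], [0.5, 0.0], [0.9, 0.0], [0.3, 0.0]]
out, chosen = moe_layer([1.0, 0.0], router_weights, experts, k=2)
print(chosen, calls)  # [2, 1] [2, 1]
```

Only experts 2 and 1 are ever called; experts 0 and 3 cost nothing for this token. With the reported Kimi K2 figures, the active share is 32B out of 1T, or about 3.2% of parameters per token.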

DeepSeek's top open model, DeepSeek R1-0528, ranks #2

R1-0528 is a refined, further-tuned version of R1 and, according to the community, the #2 open chat model. It is strong in multi-turn dialogue and reasoning tasks.

Other DeepSeek models popular with our community include:

R1 (baseline) is the original release: still solid, but now slightly behind the newer tuned variants.

V3-0324 is a MoE model with 671B total parameters, but it activates only a small subset of experts (about 37B parameters) for each token. This makes it both powerful and efficient. It performs well across instruction-following, reasoning, and multilingual tasks, but prompt format matters more here than with R1-0528.

Qwen 235b a22b (no thinking) is Alibaba's top open model, ranking at #3

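235B-a22b-no-thinking is this model run with its thinking mode turned off (thus "no thinking"): Qwen3 models can switch between step-by-step reasoning and direct answers, and this variant answers directly without a reasoning trace.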

It excels at generation and ranks highly with the community thanks to its raw reasoning power.
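Qwen's MoE model names encode two sizes: "235b a22b" means roughly 235B total parameters with about 22B activated per token. A small helper like the one below (hypothetical, written for this post) can pull both numbers out of such a name; the model strings are illustrative examples, not exact leaderboard identifiers, and the regex assumes the `<N>b-a<M>b` pattern.

```python
import re

def parse_moe_name(name):
    """Extract (total_b, active_b) in billions from names like '235b-a22b' or '30B-A3B'.

    Returns None for dense names that lack an 'a<N>b' active-parameter suffix.
    """
    m = re.search(r"(\d+(?:\.\d+)?)b[\s_-]*a(\d+(?:\.\d+)?)b", name, re.IGNORECASE)
    if m is None:
        return None
    return float(m.group(1)), float(m.group(2))

for name in ["qwen3-235b-a22b-no-thinking", "qwen3-30b-a3b", "qwen3-32b"]:
    parsed = parse_moe_name(name)
    if parsed:
        total, active = parsed
        print(f"{name}: {total:g}B total, {active:g}B active ({active / total:.1%} per token)")
    else:
        print(f"{name}: dense (no active-parameter suffix)")
```

For the #3 model this gives about 9.4% of parameters active per token.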

Other Alibaba open models popular with our community include:

The 32B and 30B-a3b variants are smaller, faster alternatives with solid performance, though they trail the top-tier models. Because 32B is dense (all of its parameters are used for every token), the community prefers its accuracy over 30B-a3b. 30B-a3b is a MoE model that activates only about 3B parameters per token, which makes it a bit faster.
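A rough way to see the speed difference is the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per token. The sketch below applies it to the nominal sizes in the model names (32B dense vs. 3B active); it deliberately ignores attention over the context, routing overhead, and memory bandwidth, and `forward_flops_per_token` is a hypothetical helper.

```python
def forward_flops_per_token(active_params_b):
    """Rough forward-pass cost: ~2 FLOPs per active parameter per token.

    Ignores attention over the context window, routing overhead, and
    memory-bandwidth effects, so it is only an order-of-magnitude guide.
    """
    return 2 * active_params_b * 1e9

dense_32b = forward_flops_per_token(32)   # dense: every parameter is used for each token
moe_30b_a3b = forward_flops_per_token(3)  # MoE: only ~3B of ~30B parameters are active

print(f"32B dense: {dense_32b / 1e9:.0f} GFLOPs/token")
print(f"30B-a3b:   {moe_30b_a3b / 1e9:.0f} GFLOPs/token")
print(f"ratio:     {dense_32b / moe_30b_a3b:.1f}x")
```

The roughly 10x gap in arithmetic is an upper bound on the speedup: all ~30B MoE weights still have to be stored and read, so real-world gains depend heavily on hardware and serving setup.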

qwq-32b is designed specifically to tackle complex reasoning problems and aims to match the performance of larger models such as DeepSeek R1, but it doesn't quite reach that mark in real-world testing.

MiniMax makes the list with its top model, M1, ranking at #4

M1 also stands out for its approach: a MoE architecture combined with a form of attention called "Lightning Attention", a linearized attention mechanism purpose-built for efficient token processing.

The approach caught our community's attention, with M1 proving very strong at dialogue, reasoning, and instruction-following.
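Lightning Attention builds on linear attention. The sketch below shows only the generic idea, not MiniMax's kernel: with a positive feature map φ (here elu(x)+1, a common choice in the linear-attention literature, assumed for illustration), causal attention can be computed from a running summary of past keys and values, so cost grows linearly with sequence length instead of quadratically. It checks that the O(n) recurrence matches the explicit O(n²) form on a tiny input. Lightning Attention is reported to be a tiled, hardware-oriented implementation of this idea; none of its specifics are reproduced here, and all function names are hypothetical.

```python
import math

def phi(v):
    # Positive feature map elu(x) + 1.
    return [x + 1.0 if x > 0 else math.exp(x) for x in v]

def dot(u, w):
    return sum(x * y for x, y in zip(u, w))

def quadratic_attention(qs, ks, vs):
    """O(n^2): each query is scored against every earlier key explicitly."""
    out = []
    for i, q in enumerate(qs):
        fq = phi(q)
        weights = [dot(fq, phi(ks[j])) for j in range(i + 1)]
        total = sum(weights)
        out.append([sum(w * vs[j][d] for j, w in enumerate(weights)) / total
                    for d in range(len(vs[0]))])
    return out

def linear_attention(qs, ks, vs):
    """O(n): keep a running state S = sum phi(k) v^T and z = sum phi(k)."""
    dk, dv = len(ks[0]), len(vs[0])
    S = [[0.0] * dv for _ in range(dk)]
    z = [0.0] * dk
    out = []
    for q, k, v in zip(qs, ks, vs):
        fk = phi(k)
        for a in range(dk):
            z[a] += fk[a]
            for b in range(dv):
                S[a][b] += fk[a] * v[b]
        fq = phi(q)
        denom = dot(fq, z)
        out.append([sum(fq[a] * S[a][b] for a in range(dk)) / denom for b in range(dv)])
    return out

qs = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
ks = [[0.3, 0.1], [-0.1, 0.2], [0.2, -0.4]]
vs = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
quad_out = quadratic_attention(qs, ks, vs)
lin_out = linear_attention(qs, ks, vs)
print(all(abs(x - y) < 1e-9 for ra, rb in zip(quad_out, lin_out) for x, y in zip(ra, rb)))  # True
```

The state `S` has a fixed size (key dim × value dim), so memory per step stays constant no matter how long the sequence gets.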

Google DeepMind lands at #5 with its top open model, Gemma 3 27b it (instruction-tuned)

Gemma 3 is an open-weight multimodal language model that handles both text and image inputs, excelling in reasoning, long-context tasks, and vision-language applications. Our community loves how Gemma 3 improved memory efficiency and extended context length compared with previous versions.
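One source of that memory saving, as described in the Gemma 3 technical report, is interleaving several local sliding-window attention layers (1024-token window) with each global layer, at a 5:1 ratio, so most layers cache only recent tokens. The sketch below counts cached key/value token slots under those assumptions at a 128K context; the 60-layer depth is hypothetical, and equal per-layer KV size is assumed.

```python
def kv_cache_tokens(num_layers, context_len, local_per_global=5, window=1024):
    """Count cached key/value token slots across all layers.

    Assumes a repeating pattern of `local_per_global` sliding-window layers
    followed by one global layer; local layers keep only the last `window` tokens.
    """
    total = 0
    for layer in range(num_layers):
        is_global = (layer + 1) % (local_per_global + 1) == 0
        total += context_len if is_global else min(window, context_len)
    return total

ctx = 128 * 1024
layers = 60  # hypothetical layer count, a multiple of 6 for a clean ratio
all_global = kv_cache_tokens(layers, ctx, local_per_global=0)  # every layer global
interleaved = kv_cache_tokens(layers, ctx)                     # 5 local : 1 global
print(f"KV cache relative to all-global: {interleaved / all_global:.1%}")  # 17.3%
```

Under these assumptions the interleaved layout caches about a sixth as many entries as an all-global stack, which is what makes long contexts cheaper to serve.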
