Why pre-training and post-training teams need to get along

July 18, 06:21

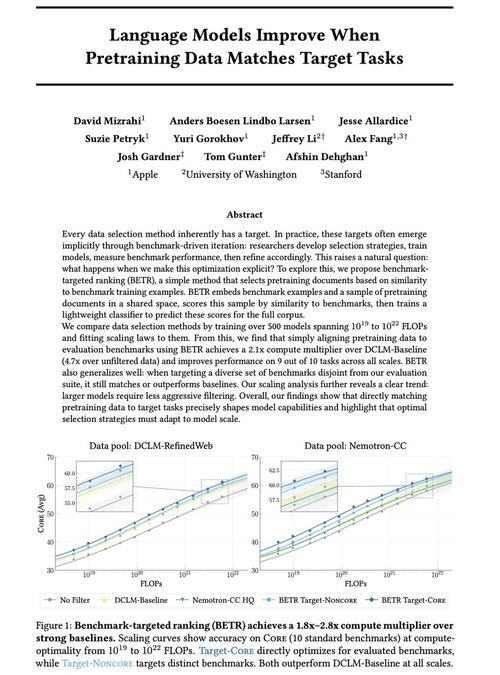

Excited to share our new work: “Language Models Improve When Pretraining Data Matches Target Tasks”

Yes, it sounds obvious (and it is!), but typically this only happens implicitly and indirectly: intuitively select data → benchmark → refine → repeat.

We wondered: what happens if we explicitly match pretraining data to benchmarks? The result is a dead simple approach that yields 2x+ compute multipliers over strong baselines and gives us a principled way to study how benchmark choices shape (and constrain!) model capabilities.

Bonus: extensive scaling laws from training 500+ models that reveal how optimal data selection evolves as models scale.

🧵 (1/14)
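To make "explicitly match pretraining data to benchmarks" concrete, here is a toy sketch of one way such matching could look. It is not the method from the paper, which this post does not describe: the bag-of-words cosine similarity, the function names (`rank_by_benchmark_match`, `select_top_fraction`), and the `keep` fraction are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def bow(text):
    """Lowercased bag-of-words counts (a stand-in for a real text embedding)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_by_benchmark_match(documents, benchmark_examples):
    """Sort documents by their best similarity to any benchmark example."""
    bench = [bow(example) for example in benchmark_examples]
    scored = []
    for doc in documents:
        vec = bow(doc)
        score = max((cosine(vec, b) for b in bench), default=0.0)
        scored.append((score, doc))
    # Highest-scoring (most benchmark-like) documents first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored]


def select_top_fraction(documents, benchmark_examples, keep=0.5):
    """Keep the top `keep` fraction of documents (hypothetical threshold)."""
    ranked = rank_by_benchmark_match(documents, benchmark_examples)
    n = max(1, math.ceil(len(ranked) * keep))
    return ranked[:n]


if __name__ == "__main__":
    docs = [
        "the capital of france is paris",
        "buy cheap tokens now",
        "what is the capital of spain",
    ]
    bench = ["what is the capital of italy"]
    for doc in select_top_fraction(docs, bench, keep=0.5):
        print(doc)
```

In practice the similarity function would presumably be a learned embedding or classifier rather than word overlap, and the kept fraction would be tuned per model scale, but the overall shape (score every document against the target tasks, then filter) stays the same.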
