Shane Gu

Gemini - RL, CoT, multilinguality. Senior Staff RS @GoogleDeepMind MTV. 🇯🇵-born 🇨🇳🇨🇦. ex: @OpenAI (JP: @shanegJP)

Asians: we will fix our own mess

Patrick Shen · Jul 18, 03:03

At their launch Cluely claimed it would kill 9 industries.

We're here to kill just one: cheating.

Meet Truely — the open-source tool that flags AI-assisted interviews in real time. Works with Zoom, Meets, Teams, and more.

The future of online interviews is here.

2.02K

To fight Asians, you need Asians

Patrick Shen · Jul 18, 03:03

At their launch Cluely claimed it would kill 9 industries.

We're here to kill just one: cheating.

Meet Truely — the open-source tool that flags AI-assisted interviews in real time. Works with Zoom, Meets, Teams, and more.

The future of online interviews is here.

245

Why pre-training and post-training teams need to get along

David Mizrahi · Jul 18, 06:21

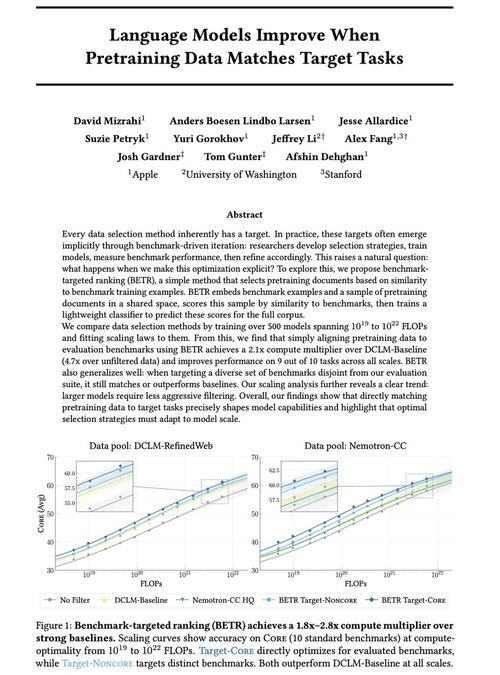

Excited to share our new work: “Language Models Improve When Pretraining Data Matches Target Tasks”

Yes, it sounds obvious (and it is!), but typically this only happens implicitly and indirectly: intuitively select data → benchmark → refine → repeat.

We wondered: what happens if we explicitly match pretraining data to benchmarks? The result is a dead simple approach that yields 2x+ compute multipliers over strong baselines and gives us a principled way to study how benchmark choices shape (and constrain!) model capabilities.
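The post does not describe how the matching is done, so the following is only a toy illustration of the general idea of explicitly matching data to target tasks. It assumes a plain bag-of-words cosine similarity and a hypothetical `select_matching` helper; it should not be read as the paper's method.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased token counts; a deliberately crude text representation."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_matching(documents, benchmark_examples, k):
    """Keep the k documents most similar to any benchmark example.

    Assumption: similarity to the closest benchmark example is the score.
    benchmark_examples must be non-empty.
    """
    targets = [bag_of_words(example) for example in benchmark_examples]

    def score(doc):
        vec = bag_of_words(doc)
        return max(cosine(vec, target) for target in targets)

    return sorted(documents, key=score, reverse=True)[:k]


# Made-up data for illustration only.
documents = [
    "What is the sum of 2 and 3? The sum is 5.",
    "Celebrity gossip of the week",
    "Solve for x: x plus 2 equals 5",
]
benchmark_examples = ["What is the sum of 4 and 7?"]
selected = select_matching(documents, benchmark_examples, k=1)
```

A real pipeline would presumably use learned embeddings or a trained classifier rather than word overlap, but the selection step has the same shape: score each document against the target tasks and keep the top of the ranking.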

Bonus: extensive scaling laws from training 500+ models that reveal how optimal data selection evolves as models scale.

🧵 (1/14)

2.69K

Grok team is internalizing human data ops (e.g. recruiting for AI tutor role for Japanese). Likely more frontier labs think about owning and operating the data labor.

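Koki Ikeda | SoftBank · Jul 15, 22:12

xAI, the developer of Grok, is recruiting Japanese-language AI Tutors.

The work includes labeling and annotating Japanese text, audio, and video data. It is fully remote, so you can work from Japan, and the pay is a high hourly rate at US levels.

🗣️ Native Japanese speaker

🧑💻 Fully remote

💰 $35–65 per hour (5,200–9,600 yen)

🕐 6-month contract (extendable)

8.38K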

Grok team is internalizing human data ops (e.g. recruiting for AI tutor role for Japanese). Given the Scale AI transition, likely more frontier labs think about owning and operating the data labor.

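Koki Ikeda | SoftBank · Jul 15, 22:12

xAI, the developer of Grok, is recruiting Japanese-language AI Tutors.

The work includes labeling and annotating Japanese text, audio, and video data. It is fully remote, so you can work from Japan, and the pay is a high hourly rate at US levels.

🗣️ Native Japanese speaker

🧑💻 Fully remote

💰 $35–65 per hour (5,200–9,600 yen)

🕐 6-month contract (extendable)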

336

If you are at ICML and interested in RL or multilinguality, please say hi to @marafinkels! We worked closely the past few months to ship an RL method to fix a critical Gemini quality issue. She has great research ideas as well! Hope Gemini x academia stay in touch.

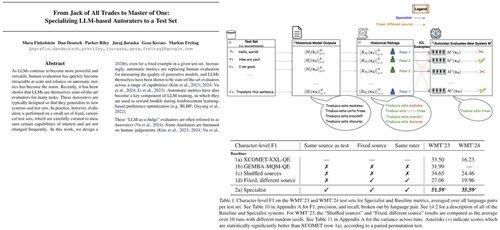

Mara Finkelstein · Nov 27, 2024

LLMs are typically evaluated w/ automatic metrics on standard test sets, but metrics + test sets are developed independently. This raises a crucial question: Can we design automatic metrics specifically to excel on the test sets we prioritize? Answer: Yes!

5.77K

Shane Gu reposted

New blog post about asymmetry of verification and "verifier's law":

Asymmetry of verification, the idea that some tasks are much easier to verify than to solve, is becoming an important idea as we have RL that finally works generally.

Great examples of asymmetry of verification are things like sudoku puzzles, writing the code for a website like Instagram, and BrowseComp problems (takes ~100 websites to find the answer, but easy to verify once you have the answer).
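To make the sudoku case concrete, here is a minimal sketch of the cheap side of the asymmetry: checking a filled 9x9 grid takes a few set comparisons, while finding that grid from a sparse puzzle generally requires search. The function names and the pattern used to build a sample grid are illustrative assumptions, not something from the post.

```python
def is_valid_solution(grid):
    """Return True if a filled 9x9 grid satisfies the sudoku constraints."""
    digits = set(range(1, 10))
    rows = [set(row) for row in grid]
    cols = [set(col) for col in zip(*grid)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in range(0, 9, 3)
        for bc in range(0, 9, 3)
    ]
    # Every row, column, and 3x3 box must contain exactly the digits 1..9.
    return all(unit == digits for unit in rows + cols + boxes)


def example_solution():
    """Build one known-valid grid from a simple shifting pattern."""
    return [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)]
            for r in range(9)]


grid = example_solution()
ok = is_valid_solution(grid)

# Corrupt a single cell: the verifier rejects the grid immediately.
broken = [row[:] for row in grid]
broken[0][0] = broken[0][1]
rejected = not is_valid_solution(broken)
```

The verifier inspects a fixed number of cells, whereas a solver may have to backtrack through many partial assignments before it finds a grid that passes this check.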

Other tasks have near-symmetry of verification, like summing two 900-digit numbers or some data processing scripts. Yet other tasks are much easier to propose feasible solutions for than to verify them (e.g., fact-checking a long essay or stating a new diet like "only eat bison").

An important thing to understand about asymmetry of verification is that you can improve the asymmetry by doing some work beforehand. For example, you might have the answer key to a math problem or test cases for a Leetcode problem. This greatly increases the set of problems with desirable verification asymmetry.
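As a rough sketch of that up-front work, the test cases below turn an open-ended "is this code correct?" question into a mechanical pass/fail check. The problem (a two-sum variant), the test cases, and the `passes_all` helper are all hypothetical choices made for illustration.

```python
def passes_all(candidate, test_cases):
    """Return True if candidate(*args) equals the expected output on every case."""
    for args, expected in test_cases:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            # A crashing candidate counts as a failed verification.
            return False
    return True


# Hypothetical problem: return indices of the two numbers that sum to target.
TEST_CASES = [
    (([2, 7, 11, 15], 9), [0, 1]),
    (([3, 2, 4], 6), [1, 2]),
    (([3, 3], 6), [0, 1]),
]


def two_sum(nums, target):
    """One-pass lookup of each number's complement."""
    seen = {}
    for i, n in enumerate(nums):
        if target - n in seen:
            return [seen[target - n], i]
        seen[n] = i
    return None


def two_sum_wrong(nums, target):
    """A plausible-looking but incorrect candidate."""
    return [0, 1]


good = passes_all(two_sum, TEST_CASES)
bad = passes_all(two_sum_wrong, TEST_CASES)
```

Writing the test cases takes effort once; after that, any number of candidate solutions can be checked without a human reading the code.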

"Verifier's law" states that the ease of training AI to solve a task is proportional to how verifiable the task is. All tasks that are possible to solve and easy to verify will be solved by AI. The ability to train AI to solve a task is proportional to whether the task has the following properties:

1. Objective truth: everyone agrees what good solutions are

2. Fast to verify: any given solution can be verified in a few seconds

3. Scalable to verify: many solutions can be verified simultaneously

4. Low noise: verification is as tightly correlated to the solution quality as possible

5. Continuous reward: it’s easy to rank the goodness of many solutions for a single problem
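Criterion 5 can be sketched by replacing a pass/fail check with a graded score. The example below assumes "fraction of test cases passed" as the reward, which is just one possible choice; the toy task (absolute value) and the candidate names are made up for illustration.

```python
def reward(candidate, test_cases):
    """Fraction of test cases passed, a value in [0, 1]."""
    passed = 0
    for args, expected in test_cases:
        try:
            passed += candidate(*args) == expected
        except Exception:
            pass  # a crash earns no credit for this case
    return passed / len(test_cases)


def rank(candidates, test_cases):
    """Sort (reward, name) pairs from best to worst."""
    scored = [(reward(fn, test_cases), name) for name, fn in candidates.items()]
    return sorted(scored, reverse=True)


def crash(x):
    raise ValueError("always fails")


# Toy task: compute the absolute value of an integer.
TEST_CASES = [((3,), 3), ((-2,), 2), ((0,), 0), ((-5,), 5)]

CANDIDATES = {
    "abs": abs,
    "identity": lambda x: x,
    "negate": lambda x: -x,
    "crash": crash,
}

ranking = rank(CANDIDATES, TEST_CASES)
```

A graded score like this lets partially correct solutions be ordered relative to each other, which gives a training signal even when no candidate is fully correct yet.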

One obvious instantiation of verifier's law is the fact that most benchmarks proposed in AI are easy to verify and so far have been solved. Notice that virtually all popular benchmarks in the past ten years fit criteria #1-4; benchmarks that don’t meet criteria #1-4 would struggle to become popular.

Why is verifiability so important? The amount of learning in AI that occurs is maximized when the above criteria are satisfied; you can take a lot of gradient steps where each step has a lot of signal. Speed of iteration is critical—it’s the reason that progress in the digital world has been so much faster than progress in the physical world.

AlphaEvolve from Google is one of the greatest examples of leveraging asymmetry of verification. It focuses on setups that fit all the above criteria, and has led to a number of advancements in mathematics and other fields. Different from what we've been doing in AI for the last two decades, it's a new paradigm in that all problems are optimized in a setting where the train set is equivalent to the test set.

Asymmetry of verification is everywhere and it's exciting to consider a world of jagged intelligence where anything we can measure will be solved.

298.76K

Impactful work anyone can do is to use LLMs to journal and digitize as much of your workflow, CoTs, and inspiration as possible.

Context engineering for automating and augmenting yourself in life and work.

Thariq · Jul 15, 05:51

Journals & To Dos

I have a few custom commands:

/journal command that will create a new journal entry for the day.

/todos command that will let me create new to dos or mark others as done. To dos are organized by topic in files, e.g. ‘

Claude will often search my code, projects, etc. for more context when I add a to do, which is super helpful.

835
