I am extremely excited about the potential of chain-of-thought faithfulness & interpretability. It has significantly influenced the design of our reasoning models, starting with o1-preview.

As AI systems spend more compute working, e.g., on long-term research problems, it is critical that we have some way of monitoring their internal process. The wonderful property of hidden CoTs is that while they start off grounded in language we can interpret, the scalable optimization procedure is not adversarial to the observer's ability to verify the model's intent, unlike, e.g., direct supervision with a reward model.

The tension here is that if the CoTs were not hidden by default, and we view the process as part of the AI's output, there is a lot of incentive (and in some cases, necessity) to put supervision on it. I believe we can work towards the best of both worlds here - train our models to be great at explaining their internal reasoning, but at the same time still retain the ability to occasionally verify it.

CoT faithfulness is part of a broader research direction, which is training for interpretability: setting objectives in a way that trains at least part of the system to remain honest & monitorable with scale. We are continuing to increase our investment in this research at OpenAI.

Jul 16, 00:09

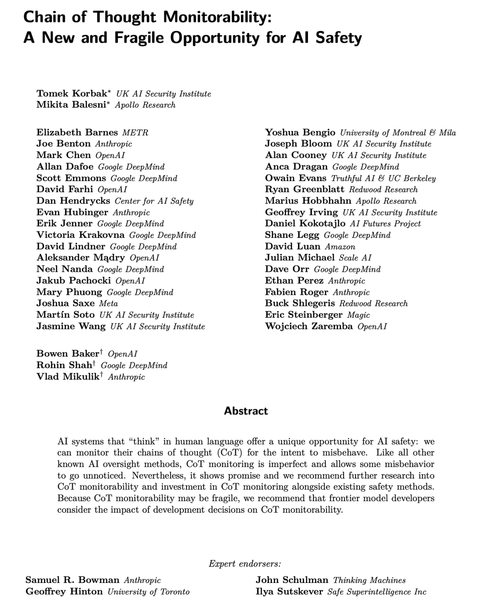

Modern reasoning models think in plain English.

Monitoring their thoughts could be a powerful, yet fragile, tool for overseeing future AI systems.

I and researchers across many organizations think we should work to evaluate, preserve, and even improve CoT monitorability.
