
Talent and capex are two of the most important trends to watch in AI:

1/ Who a company has to build AI models

2/ How much money a company has to train next-generation models

Had a free day to play with o3 Pro, so sharing a few visuals and tables on these topics 🧵

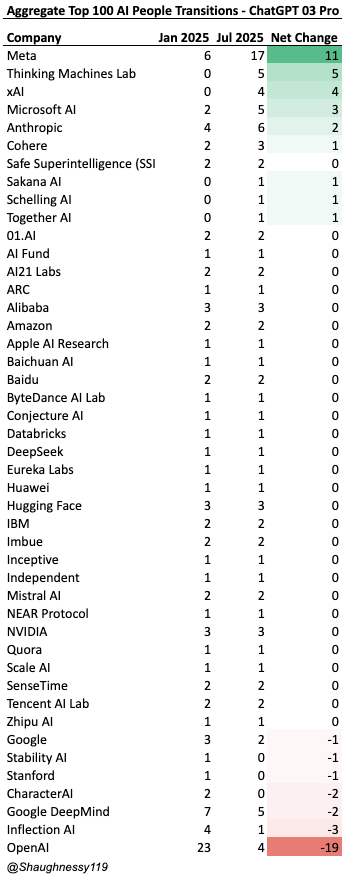

Talent Flows Since the Start of the Year

Of the top 100 people in AI, @meta attracted the most (+11), followed by @thinkymachines (+5), @xai (+4), @Microsoft (+3) and @AnthropicAI (+2)

@OpenAI had the largest outflow of talent (-19)

Microsoft acquired Inflection's talent and Google did the same with Character.AI, while OpenAI's departures went mostly to Meta, with the rest to SSI and Thinking Machines (TM).

Zuckerberg is convincing.

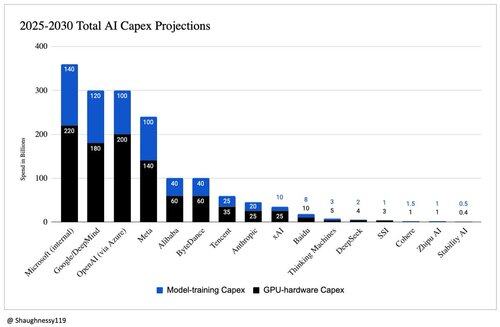

Projected Capex to 2030

Most people think we'll achieve AGI by 2030, so it seemed a fitting horizon.

A massive power-law distribution, with Microsoft, Google, OpenAI and Meta spending the most (76% of total spend!)

This chart will prove to be very wrong as companies IPO, grow recurring revenue, raise capital and spend it on training runs. Capex is also really hard to break out, so I'd assume the numbers are wrong but directionally correct.

I spoke to OpenAI o3 Pro all day, so what are its thoughts on our conversation?

- Spend is still buying leadership, but every incremental performance point now costs exponentially more, so we are in a clear diminishing-returns regime

- GPU supply has become the ultimate gate. Whoever locks up Nvidia Blackwell or AMD MI300 capacity through 2027 keeps a structural edge

- Elite research talent is scattering into smaller venture labs and Chinese challengers, which dilutes the historic DeepMind / OpenAI duopoly and raises execution risk for incumbents

- Balance-sheet depth decides stamina: Meta, Google, Microsoft and Amazon can self-fund multi-billion-dollar clusters, while OpenAI, Anthropic and xAI must keep tapping external cash at ever-higher stakes

- Algorithmic efficiency breakthroughs, such as DeepSeek's large MoE models and Meta's open-source Llama roadmap, could flip the race by cutting the cost per score point by an order of magnitude

Inference-Time Spend

I probably used 3-4 hours of o3 Pro Deep Research thinking time. Do with that what you will.

None of this is perfectly accurate, given transparency issues, difficulties breaking down capex and the like.

You can learn a LOT with o3 Pro all day. Off to dinner!

If you're an early-stage AI founder raising capital, please get in touch. I'd love to talk!

Submit your document or deck to @VenturesRobot, my AI, and I'll see it, or DM me!
