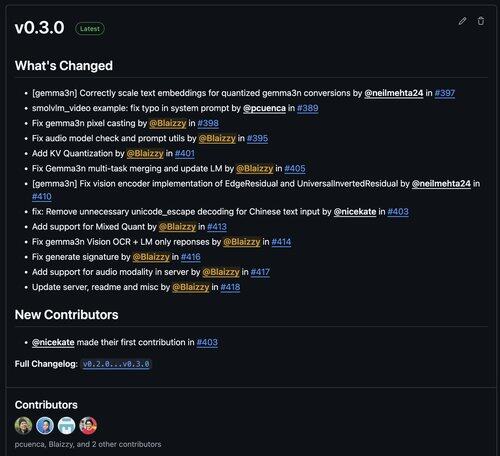

MLX-VLM v0.3.0 is here!

And it brings a lot of significant improvements 🔥📷

What’s new:

- KV Cache quantization

- Mixed quantization (e.g., 4-bit + 6-bit)

- Added support for the audio modality in the server

- Gemma3n: fixed embeddings, vision, pixel casting, multi-task (audio + vision), and OCR

- Fixed Chinese decoding

- Refactored generate and convert package
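
For anyone new to the quantization items above, here is a rough, library-free sketch of why mixing bit widths can be useful. It is not how mlx-vlm implements anything: `quantize`, `dequantize`, and `max_error` are hypothetical helpers, and simple min/max affine quantization is assumed purely for illustration. It only shows that 6-bit codes reconstruct the same values more accurately than 4-bit codes, which is the trade-off a mixed scheme plays with.

```python
def quantize(values, bits):
    """Map floats to integer codes in [0, 2**bits - 1] using min/max affine scaling."""
    levels = (1 << bits) - 1
    lo, hi = min(values), max(values)
    # Avoid division by zero when all values are identical.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Recover approximate floats from integer codes."""
    return [c * scale + lo for c in codes]


def max_error(values, bits):
    """Largest absolute reconstruction error at the given bit width."""
    codes, scale, lo = quantize(values, bits)
    restored = dequantize(codes, scale, lo)
    return max(abs(a - b) for a, b in zip(values, restored))


# Toy "weights" spread evenly over [-0.5, 0.5] (illustrative data only).
weights = [i / 100.0 - 0.5 for i in range(101)]

err4 = max_error(weights, 4)  # fewer bits: smaller storage, larger error
err6 = max_error(weights, 6)  # more bits: larger storage, smaller error
print(f"4-bit max error: {err4:.4f}")
print(f"6-bit max error: {err6:.4f}")
```

A mixed setup would, in spirit, spend the higher bit width on the parts of a model that are most sensitive to this error and the lower one everywhere else.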

Thank you very much, and a shout-out to the amazing mlx-vlm contributors: Neil from LMStudio, @pcuenq, and Nicekate.

> pip install -U mlx-vlm

Please leave us a star ⭐️
