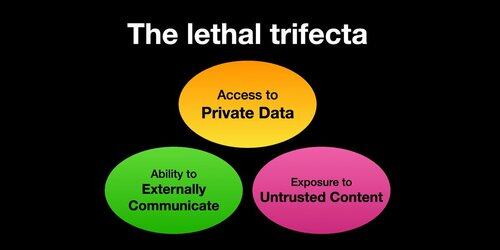

If you use "AI agents" (LLMs that call tools) you need to be aware of the Lethal Trifecta

Any time you combine access to private data with exposure to untrusted content and the ability to externally communicate, an attacker can trick the system into stealing your data!
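A minimal sketch of that rule, assuming each tool can be hand-labelled with three boolean flags. The `Tool` class and its field names are hypothetical, made up for illustration; they are not part of MCP or any real library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    # Hypothetical capability flags; you have to assign these yourself.
    name: str
    reads_private_data: bool = False
    sees_untrusted_content: bool = False
    can_communicate_externally: bool = False


def has_lethal_trifecta(tools):
    """Return True if the tools, taken together, cover all three legs."""
    return (
        any(t.reads_private_data for t in tools)
        and any(t.sees_untrusted_content for t in tools)
        and any(t.can_communicate_externally for t in tools)
    )


# An inbox holds private mail AND attacker-written mail; a URL fetcher
# can send data out via the request itself.
agent_tools = [
    Tool("read_inbox", reads_private_data=True, sees_untrusted_content=True),
    Tool("fetch_url", can_communicate_externally=True),
]
print(has_lethal_trifecta(agent_tools))  # True
```

Removing any one leg (for example, dropping `fetch_url`) makes the check return `False`, which is the point: the danger comes from the combination, not from any single tool.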

Here's my full explanation of why this combination is so dangerous - if you are using MCP you need to pay particularly close attention because it's very easy to combine different MCP tools in a way that exposes you to this risk

And yes, this is effectively me trying to get the world to care about prompt injection by trying out a new term for a subset of the problem!

I hope this captures the risk to end users in a more visceral way - particularly important now that people are mixing and matching MCP

@IanChen524 That depends very much on which definition of "AI agent" you are using

If you are using the popular "an LLM running tools in a loop" definition then any LLM that calls at least one MCP counts as an "agent"

Which definition do you use?

@IanChen524 Importantly, you can use only entirely trusted, well-constructed MCP servers and still run into private data exfiltration attacks if you combine the wrong set of MCPs that, together, have the three characteristics
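To illustrate how individually harmless servers can add up to the dangerous combination, here is a rough sketch that enumerates the smallest risky sets. The server names and capability labels are invented for this example and would need to be assigned by hand for a real setup; nothing here queries an actual MCP server.

```python
from itertools import combinations

# Hypothetical capability labels, one per leg of the trifecta.
PRIVATE, UNTRUSTED, EXFIL = "private_data", "untrusted_content", "external_comms"
TRIFECTA = {PRIVATE, UNTRUSTED, EXFIL}

# Hypothetical servers: each one is well built and has only one leg (or none).
servers = {
    "issue_tracker": {UNTRUSTED},  # public issues anyone can file
    "internal_docs": {PRIVATE},
    "web_fetch": {EXFIL},
    "calculator": set(),
}


def risky_combinations(servers):
    """Return the minimal sets of servers whose combined capabilities cover the trifecta."""
    found = []
    names = sorted(servers)
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            caps = set().union(*(servers[n] for n in combo))
            # Skip supersets of a combination already reported.
            already_covered = any(set(f) <= set(combo) for f in found)
            if TRIFECTA <= caps and not already_covered:
                found.append(combo)
    return found


print(risky_combinations(servers))
# [('internal_docs', 'issue_tracker', 'web_fetch')]
```

No single server in this example is flagged on its own; only the three together are.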

@WolframRvnwlf Lots of people have been building that and I don't trust it, for similar reasons to why I don't trust antivirus software: it defends against known threats but fails to protect against new vulnerabilities, so it can always be subverted by a suitably motivated attacker

@WolframRvnwlf I don't want antivirus, I want my software to be secure enough that viruses can't do anything bad to my systems!

... and @Atlassian are the latest company to be added to my collection of examples of the lethal trifecta in action: their newly released MCP server has been demonstrated to allow prompt injection attacks in public issues to steal private data

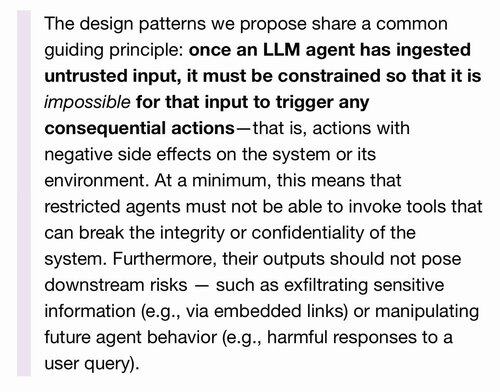

@convexDad I'm a firm believer in this principle, from this paper
