
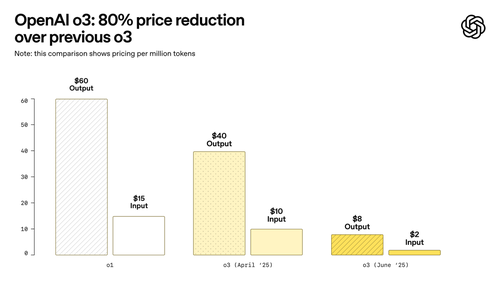

We’re cutting the price of o3 by 80% and introducing o3-pro in the API, which uses even more compute.

o3:

Input: $2 / 1M tokens

Output: $8 / 1M tokens

Now in effect.
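
To see what the new o3 rates mean for a single request, multiply the token counts by the per-million prices. This is a minimal sketch: `o3_cost` is a hypothetical helper, not part of any SDK. It assumes reasoning tokens are billed as output tokens, so pass them in `output_tokens`.

```python
# Sketch: estimate the USD cost of one o3 API call from token counts,
# using the per-1M-token prices quoted in the post.
# ASSUMPTION: reasoning tokens are counted in output_tokens.
O3_INPUT_PER_M = 2.00   # USD per 1M input tokens
O3_OUTPUT_PER_M = 8.00  # USD per 1M output tokens


def o3_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single o3 request."""
    return (input_tokens * O3_INPUT_PER_M + output_tokens * O3_OUTPUT_PER_M) / 1_000_000


# Example: 10,000 input tokens and 2,000 output tokens.
print(f"${o3_cost(10_000, 2_000):.4f}")  # prints "$0.0360"
```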

We optimized our inference stack that serves o3. Same exact model, just cheaper.

o3-pro:

Input: $20 / 1M tokens

Output: $80 / 1M tokens

(87% cheaper than o1-pro!)
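
The "87% cheaper" figure can be checked with a short calculation. This sketch assumes o1-pro list prices of $150 per 1M input tokens and $600 per 1M output tokens. The post does not state these figures, so treat them as an assumption. `request_cost` and `percent_cheaper` are hypothetical helpers.

```python
# Sketch: compare o3-pro list prices with o1-pro's (USD per 1M tokens).
# ASSUMPTION: o1-pro prices of $150 input / $600 output are not from the post.
PRICES = {
    "o3-pro": {"input": 20.0, "output": 80.0},
    "o1-pro": {"input": 150.0, "output": 600.0},  # assumed, see note above
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request for a model listed in PRICES."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


def percent_cheaper(new: str, old: str, kind: str) -> float:
    """How much cheaper `new` is than `old` for one price kind, in percent."""
    return 100 * (1 - PRICES[new][kind] / PRICES[old][kind])


for kind in ("input", "output"):
    # Both ratios are 20/150 = 80/600 = 0.1333..., i.e. about 86.7% cheaper.
    print(kind, f"{percent_cheaper('o3-pro', 'o1-pro', kind):.1f}% cheaper")
```

Under these assumptions, both input and output come out about 86.7% cheaper, which rounds to the 87% in the post.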

We recommend using background mode with o3-pro: long-running tasks will be kicked off asynchronously, preventing timeouts.
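
Background mode means the client starts a request and then polls for its result. This avoids holding one HTTP connection open for the whole run. In OpenAI's Responses API this is requested with `background=True` on `client.responses.create`, and the result is fetched with `client.responses.retrieve`. Check the current API docs, because the status names used below ("queued", "in_progress", and so on) are an assumption based on that API. `wait_for_response` is a hypothetical helper that accepts any `retrieve` callable, so the demo runs against a stand-in instead of the network.

```python
import time
from types import SimpleNamespace
from typing import Any, Callable

# ASSUMPTION: statuses a background response reports while still running.
PENDING = {"queued", "in_progress"}


def wait_for_response(
    retrieve: Callable[[str], Any],
    response_id: str,
    poll_interval: float = 2.0,
    timeout: float = 600.0,
) -> Any:
    """Poll retrieve(response_id) until the response leaves a pending state.

    `retrieve` is any callable returning an object with a `.status`
    attribute, e.g. client.responses.retrieve from the OpenAI Python SDK.
    """
    deadline = time.monotonic() + timeout
    while True:
        resp = retrieve(response_id)
        if resp.status not in PENDING:
            return resp  # completed, failed, cancelled, etc.
        if time.monotonic() >= deadline:
            raise TimeoutError(f"response {response_id} still {resp.status!r}")
        time.sleep(poll_interval)


# Demo with a stand-in client that reports queued -> in_progress -> completed.
_statuses = iter(["queued", "in_progress", "completed"])


def fake_retrieve(response_id: str) -> SimpleNamespace:
    return SimpleNamespace(id=response_id, status=next(_statuses))


print(wait_for_response(fake_retrieve, "resp_demo", poll_interval=0).status)  # prints "completed"
```

With the real SDK, the call would look roughly like `resp = client.responses.create(model="o3-pro", input="...", background=True)` followed by `wait_for_response(client.responses.retrieve, resp.id)`.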
