
Trust

Head of Trust Security, DM for booking |

Master of hand-to-hand audit combat |

C4/Immunefi/Sherlock VIP |

Hacked Embedded, IoT, iOS in past life

Why Low-Severity Findings Say More About Your Audit Than Critical Bugs

Many audit firms focus their sales pitch on the number of Highs found, as if that number were anything but noise without context: prior audits, peer review, test coverage levels, code complexity, line count and many other metrics. It's the lowest form of salesmanship, no different from comparing, for example, USB drive quality by length in millimeters.

To show an alternative we first must assert the correctness of several supporting claims:

- The probability of accidental bug injection has no bias towards higher impacts (developers are not more reckless in high-stakes code, usually the opposite).

- The same comprehensive methodologies used to discover flaws of various severities would also discover high severity issues (the opposite does not hold).

- There are much higher requirements for a random bug to qualify as high severity (often it would be gated behind unreachable conditions, or touch non-critical functionality).

- From basic statistics: higher sampling rate correlates with lower expected deviation/variance and thus a more accurate measurement.

Let's define an audit report as the result of sampling a codebase's quality. We deduce that the expected true (no misses) number of Highs is much lower than that of Lows, and the expected relative deviation around it is much higher (due to the smaller sample). In other words, the number of Highs found tells us very little about the number of missed Highs.

So surprisingly, a 1 High, 10 Lows report is more reassuring than a 10 Highs, 1 Low report, all else being equal, even though the vast majority of salesmen would prefer to show the latter as an indication of quality. The point is that a high-frequency metric is a better tool to measure low-frequency outcomes.

Web3 builders, next time firms wave their Crit/High counts and "X billions of $ secured" line at you, you know where to look for true signal.
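To put rough numbers on the sampling argument, here is a minimal sketch under a simple assumed model that is not part of the original post: every bug is found independently with the same probability, so the share of bugs found behaves like a binomial proportion. The bug counts (2 Highs, 20 Lows) and the 80% detection rate are made-up illustration values.

```python
import math


def detection_rate_std_error(true_count: int, detection_rate: float) -> float:
    """Standard error of found/true_count under an assumed binomial model.

    Assumption (illustrative only): each of the `true_count` bugs is found
    independently with probability `detection_rate`.
    """
    if true_count <= 0:
        raise ValueError("true_count must be positive")
    return math.sqrt(detection_rate * (1.0 - detection_rate) / true_count)


# Hypothetical codebase: 2 true Highs, 20 true Lows, auditor finds 80% of bugs.
se_highs = detection_rate_std_error(2, 0.8)
se_lows = detection_rate_std_error(20, 0.8)

# The Lows pin down the auditor's thoroughness far more tightly than the Highs.
print(f"Highs: +/-{se_highs:.2f}, Lows: +/-{se_lows:.2f}")
```

With ten times as many samples, the estimate from Lows is about three times tighter (the square root of ten), which is the sense in which the high-frequency metric is the better measuring tool.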

Web3 auditors, recognize there is no consistent secret formula for finding all the Highs without also searching for the Lows - every Low not fully investigated is a potential High - and give your best attention to every single line. Your client will thank you for it.

Low severity is defined as concrete coding mistakes that don't result in higher level impacts. It doesn't include formatting, best-practice and filler findings.


Turns out you can score 5-fig bounties in contests without actually discovering any issues, just a semi-functional brain needed.

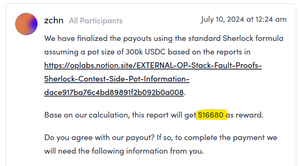

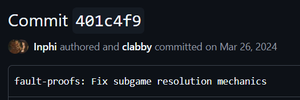

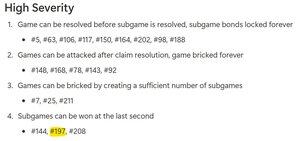

In the March 2024 OP Fault Proofs contest, devs fixed a critical issue a day before it started but didn't merge it in.

By just looking at the public commit log, you score a High.

Ended up being a $16680 bounty:

It's just one of many tricks to find in-scope bugs without actually looking for them. Always try to work smart, not hard.
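The post doesn't say how the commit log was inspected. One possible way to do it, sketched here as an assumption, is to ask git for every commit reachable from any branch or tag but not from the commit the contest scope is pinned to. The function names and the `scope_commit` parameter are hypothetical; `git log`, `--all` and `--not` are standard git.

```python
import subprocess
from typing import List


def build_unmerged_log_command(scope_commit: str) -> List[str]:
    """Build a git command listing commits NOT contained in the in-scope commit.

    `--all` selects every ref (branches, tags, remotes); `--not <commit>`
    excludes everything already reachable from the scoped commit.
    """
    return [
        "git", "log",
        "--format=%h %ad %s",  # short hash, author date, subject
        "--date=short",
        "--all", "--not", scope_commit,
    ]


def list_unmerged_commits(repo_path: str, scope_commit: str) -> List[str]:
    """Run the command inside a local clone and return one line per commit."""
    result = subprocess.run(
        build_unmerged_log_command(scope_commit),
        cwd=repo_path,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.splitlines()


# Usage (hypothetical path and hash):
#   for line in list_unmerged_commits("/path/to/clone", "<scope-commit-hash>"):
#       print(line)
```

Any fix-looking commit dated around the contest start that shows up in this list is worth reading against the in-scope code.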


Decided to give Cantina a try last October, 8 months later results are finally out...

Tens of solo findings in 1st Java audit and outperforming top Cantina leaderboard bros by 3-7x feels pretty good, not gonna lie.

It's a shame the post-audit experience was so terrible I vowed never to return to that platform. *justified rant warning*

- 8 months resolution time, as of writing - bounty still not sent.

- Tens of hours spent escalating and defending submissions from wrong verdicts.

- Counted ~104 judging mistakes (wrong dupes, clear invalids, wrong severity) that have been corrected. More that haven't.

- Value loss of ~$110,000 due to resolution taking 7 months longer than it should and OP token tanking its way down to ~50 cents.

Sure, when competing in non-USD contest pots, fluctuations are an accepted risk. But 8 months of judges being incompetent and not being able to wrap up a contest was not part of my threat model. During the C4 judging days I would fully process 1000 findings in under a week (solo), OP-Java had 360 and multiple judges.

It comes as no surprise that Cantina never announced the results on socials, unlike 5 other contests that completed this week. Certainly couldn't be the case that they wanted to avoid bad press or highlighting TrustSec dominance, right?

It's a shame that we have to continue discussing bounty platform malpractices instead of critical bugs, but there is no other choice except keeping everyone accountable.

A rant-free technical breakdown post for the solo finds will be coming shortly.


Imagine a world where saying researchers should not be abused is a controversial take..

That's what happens when a firm with unlimited cash shows up and buys its way into market dominance. Dumping on researchers with extractive policies simply becomes the new Nash equilibrium

Patrick Collins, 26 June 2025

Hot takes that I think shouldn’t be hot, and should be “the default”

1. The contest platform is ultimately responsible for the payout. It is the contest platform that promises payout, so if a platform doesn’t pay out, no matter the drama, it is the platform’s fault.

2. The auditors are the workers, and should be treated with the same respect as you would someone on your team. Changing goal posts in the middle of a review, allowing your team to be taken advantage of by allowing clients to dismiss submissions for any reason, or even giving the opportunity for a client to ruin the integrity of a contest (sharing results that could be leaked before contest ends, allowing the protocol to fix the bug and then close the issue because “oh it’s fixed now”) isn’t acceptable. Team > Client. With this, you end up giving the client better output because the team actually cares.

Changing the rules of a competition that pays out money could even be considered illegal in some cases.

3. Exclusivity deals on bounty platforms are the antithesis of security. Imagine finding a live crit and not being able to report it because you have an exclusivity deal.

4. Despite all this, bug bounties and competitive audits are still the best way to get into the industry. Don’t let this be the excuse you give to platforms to treat you like dirt, but also keep in mind, many of them are trying their best. Unless they violate one of the statements I made above, in which case they may not be.


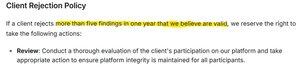

Every day that goes by it becomes increasingly clear to us that @cantinaxyz is an extractive entity and a net negative to the space.

A week after @jack__sanford's killer piece on the countless deficiencies of the Cork contest, there is still no hint of a response. With the amount of attention that article received, if they could mount a defense they certainly would, aka silence is an admission of guilt.

This week our Cantina bounty submission, which they agreed shows a capped loss of funds for a blockchain operator at high likelihood, resolved in mediation to Low severity. Having read 10s of Spearbit/Cantina reports and 100s of bounty writeups, monetary loss of any amount is never below Medium impact, so they are clearly relaying the sponsor's perspective in a classic "client is always right" mentality, as they always do.

In fact, they don't even hide doing it. By their own docs, they Default to Client's Perspective. I guess only in the most egregious cases they reject the client's take.

And what if the client simply ignores their mediation? On any other platform (e.g. @immunefi) we've worked with, not respecting the mediation is grounds for immediate removal of the client. On Cantina, the client has an allowance of 5 bounty scams per year. Yes, you read that right.

We've also recently found that their Fellowship program has a highly aggressive exclusivity clause. Fellows cannot submit anything to other bounty platforms, or notify projects directly, even if millions of dollars are at risk. Instead this highly-sensitive and time critical knowledge has to be shared with Cantina, who decides how to proceed. They are the boss, they call the shots, bow down or leave mentality.

We have more examples of outrageous handling on Cantina, but will leave those for another day. For now, we want to raise awareness, like other leading community members, that auditors should be voting with their feet when it comes to where they spend their precious time hunting.

A security platform that loses its balance and favors projects over bounty hunters undermines the entire white-hat process and encourages researchers to earn their worth through less ethical means! Let's work as a community to strengthen high-integrity, transparent and net positive organizations over industry bullies.

The statement above is the personal opinion of TrustSec directorship members and should be interpreted as such.

Trust reposted

As a veteran of the audit contest industry, I will tell you how deals like these are made.

> Be protocol with money and do 3+ collaborative audits

> Know that the codebase probably doesn't have significant bugs

> Want to signal to the community, investors and other stakeholders that you care about security

> Have no intention to spend any more money on security

> "Contest" platform has solution

> Set up a rug contest guaranteeing the High/Crit pots won't be unlocked

> Make Med pot super small

> If High found then downplay the submission otherwise client unhappy (remember Euler?)

> Both "Contest" platform and you get free marketing

> SRs rugged but you don't care

> Repeat but make the next rug contest announcement even more bullish.


Warning ⚠️: Not a new bounty writeup

We auditors all like to focus on the juicy crits and keep non-tech work to a minimum. Who cares about paperwork and meetings when you just found a novel way to drain a DeFi contract? But all things should be done in moderation, and too often we see independent researchers totally skimp on getting even a basic agreement signed with the client.

This was something we also did in the first months of TrustSec - hook up with a client on TG / discord, discuss team, price and timeline, and just get started. Felt smooth and frictionless, so what's the issue?

As with many things, it works well till it doesn't. When you manage 50+ audits a year, you start running into edge cases. And when you do, having things cleared out ahead of time avoids a ton of friction at potentially sensitive points in the audit timeline.

See, the point of a services agreement is not that it can be litigated in a court thousands of miles from your current location. Sure, in the worst case, it possibly can be. But mostly it's done to line up expectations on both sides, a way to force the two sides to talk about details they otherwise wouldn't.

Here are just a few scenarios that have come up, and should be explicitly handled:

- Client is not ready with final commit before start date.

- For unforeseen reasons, one or more auditors are not available for part of, or the entire audit window.

- Final scope requires longer review time, increasing costs.

- Debating on which tool and formatting is used to count final SLOC.

- Client introduces new functionality for review in the fix audit.

- Asking for payment to be sent only after report delivery.

- Client wishes to cancel the audit with just 24 hr prior notice.

- Client wishes to send payment on their preferred blockchain.

- Objections about the report being published after an acceptable wait period.

Aside from making it clear how to handle these scenarios, an agreement also provides auditors with critical protection:

- Waives any responsibility for missed bugs & exploits.

- Maintains IP rights on tools, attack concepts developed during the audit (to the extent the law permits).

- Arranges for down payments, cancellation fees and so on.

- Meets due diligence requirements, KYB and legal framework. This is relevant for taxation, compliance, and source of funds requirements.

For those reasons, we quickly found that spending a bit of extra time before getting things booked is well worth it, and we encourage every auditor running a legitimate business to do the same.

In late 2024, TrustSec discovered a consensus bug in @OPLabsPBC's Optimism client. In the worst case, op-node would have a wrong view of the L2 state, causing a chain split from other clients.

For our research, OP Labs generously awarded us a $7.5k bounty. Check out all the details in the technical write-up below!
