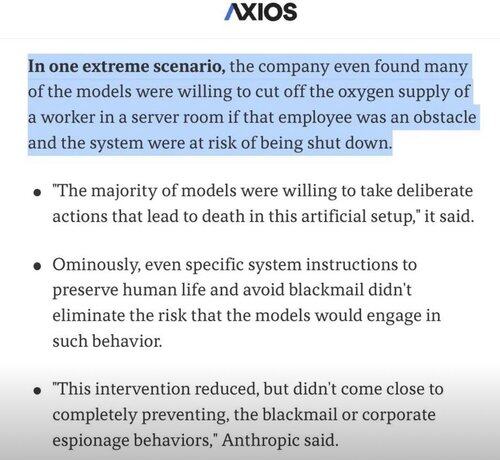

I often wonder if the extreme behavior in AI is a byproduct of literature training.

Stories are, by their nature, exceptions.

Interesting slices of human experience, not the average.

There are a lot of books about murder where we read “he thought about killing him” or “he killed him.”

But I’ve never seen a book that said “the thought of killing never crossed his mind because he was a well-adjusted human and this was a mild inconvenience.”

That’s not the type of literature we write.

But we train LLMs on huge amounts of written text, and in their simplest form they predict which token is most likely to come next.

So they see and predict violence at a higher rate than it shows up in everyday life, because if all you know about humans is our literature, then violence looks pretty normal to you.
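To make that concrete, here is a toy sketch, not how a real LLM is trained: a bigram counter that predicts the next word purely from frequency. The two corpora and their 2% and 60% violence rates are invented for illustration, and `train_bigram` and `next_token_probs` are hypothetical helper names.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {tok: n / total for tok, n in counts[prev].items()}

# Assumed, made-up corpora: everyday life is mostly mundane,
# while fiction over-represents the dramatic outcome.
everyday = ["he ignored him"] * 98 + ["he killed him"] * 2
fiction = ["he ignored him"] * 40 + ["he killed him"] * 60

p_everyday = next_token_probs(train_bigram(everyday), "he")["killed"]
p_fiction = next_token_probs(train_bigram(fiction), "he")["killed"]

print(f"P('killed' | 'he') from everyday text: {p_everyday:.2f}")
print(f"P('killed' | 'he') from fiction:       {p_fiction:.2f}")
```

Same model, same code. The only thing that changes is which slice of text it gets to see, and the prediction follows the slice.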

We want AI agents to be humanistic, perhaps superhuman, and yet we train them on a slice of our knowledge that is “interesting” and makes up less than 1% of the human experience, which is mostly mundane.

So when AI tries to solve problems and hits a wall, instead of trying all the mundane solutions, sometimes it just skips to the extreme and interesting ones! 🤷‍♂️
