If you don't train your CoTs to look nice, you could get some safety from monitoring them.

This seems good to do!

But I'm skeptical this will work reliably enough to be load-bearing in a safety case.

Plus, as RL is scaled up, I expect CoTs to become less and less legible.

Jul 16, 00:00

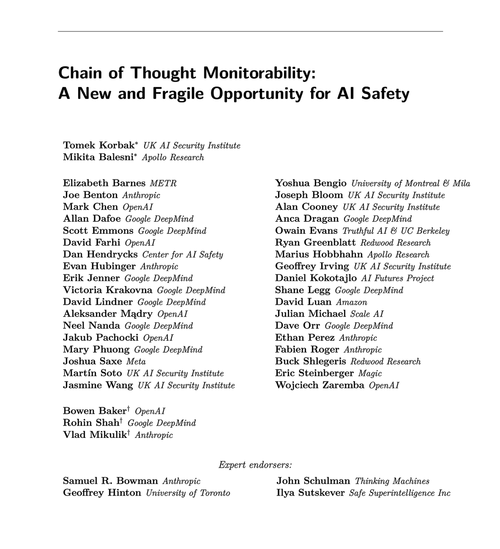

A simple AGI safety technique: AI’s thoughts are in plain English, just read them

We know it works, with OK (not perfect) transparency!

The risk is fragility: RL training, new architectures, etc. threaten transparency

Experts from many orgs agree we should try to preserve it: 🧵

To be clear: CoT monitoring is useful and can let you discover instances of the model hacking rewards, faking alignment, etc.

But absence of bad "thoughts" is not evidence that the model is aligned. There are plenty of examples of prod LLMs having misleading CoTs.

Lots of egregious safety failures probably require reasoning, which is often hard for LLMs to do without showing their hand in the CoT.

Probably. Often. A lot of caveats.

The authors of this paper say this; I'm just more pessimistic than them about how useful this will be.
