Trending topics

Bonk Eco continues to show strength amid $USELESS rally

Pump.fun to raise $1B in token sale; traders speculate on airdrop

Boop.Fun leading the way with a new launchpad on Solana.

Seth Ginns

Managing Partner @coinfund_io. Former Equities Investor at Jennison Associates; IBD at Credit Suisse

Personal opinions. Not investment advice

Convergence accelerating

@HyperliquidX DAT (digital asset treasury) announced this am, led by Atlas Merchant Capital (Bob Diamond, Fmr CEO Barclays) with D1 Capital participation (Dan Sundheim, Fmr CIO Viking). Fmr Boston Fed President Eric Rosengren expected to join board

Seth Ginns · 13 July, 19:19

Yes, treasury companies should trade at a premium to NAV

What?

🧵

10.43K

Seth Ginns reposted

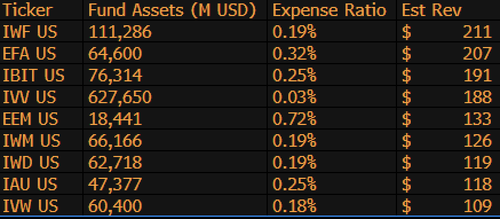

$IBIT is now the 3rd-highest revenue-generating ETF for BlackRock out of 1,197 funds, and is only $9b away from being #1. Just another insane stat for a 1.5-year-old (literally an infant) ETF. Here's the Top 10 list for BLK (aside: how about the forgettable $IWF in the top spot, who knew?)

107.99K

Vote on Senate stablecoin bill today at 4:30pm ET

Senate Cloakroom · 17 June 2025

At 4:30pm, the Senate is expected to proceed to two roll call votes on the following:

1. (If cloture is invoked) Confirmation of Executive Calendar #98 Olivia Trusty to be a Member of the Federal Communications Commission for the remainder of the term expiring June 30, 2025.

2. Passage of Cal. #66, S.1582, GENIUS Act, as amended.

781

Seth Ginns reposted

Onchain yields remain out of reach for most people. Despite being permissionless, their complexity discourages many from participating.

Through our close partnership with @Veda_labs, these complexities on Plasma will be abstracted away to bring sustainable yield to the masses.

33.72K

Seth Ginns reposted

Thoughts/predictions on decentralized AI training, 2025.

1. One thing to say is we are definitely in a different world with decentralized AI training than we were 18 months ago. Back then, decentralized training was impossible and now it's in-market and is a field.

2. Make no mistake, the end goal of d-training is to train competitive, frontier models on d-networks. As such, we are just at the start of our competitive journey, but we're moving fast.

3. It's now consensus that we can pre-train and post-train multi-billion parameter models (mostly LLMs, mostly transformer architectures) on d-networks. The current state of the art is up to ~100B, the top end of which is in sight but has not been shown.

4. It's now consensus we can train <10B parameter models on d-networks pretty feasibly. There have also been specific case studies (primarily from @gensynai @PrimeIntellect @NousResearch) where 10B, 32B, 40B parameters have been or are being trained. @gensynai's post-training swarm operates on up to 72B parameter models.

5. The @PluralisHQ innovation has now invalidated the "impossibility" of scalable pre-training on d-networks by removing the communication-inefficiency bottleneck. However, raw FLOPs, reliability, and verifiability remain bottlenecks for these types of networks: problems that are very solvable but will take some time to resolve technically. With Protocol Learning from Pluralis as it stands, I think we get to ~100B models on a 6-12 month timeframe.

6. How do we get from 100B to 300B parameter models? I think we need to find ways to effectively and fluidly shard parameters and to keep individual device memory relatively low (e.g. <32GB memory per device). I think we need to get to 20 EFlops in a network; that means something like 10-20K consumer devices running for 4-6 weeks on a training run.
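Point 6 packs several back-of-envelope numbers together. The Python sketch that follows makes that arithmetic explicit under stated assumptions. It reads "20 EFlops" as sustained aggregate throughput in FLOP/s (the post does not say whether it means peak or sustained). It also assumes 2 bytes per parameter for bf16 weights alone, or roughly 16 bytes per parameter for mixed-precision Adam training state; that is a common rule of thumb, not a figure from the post. The helper names (`min_shards`, `per_device_flops`, `total_ops`) are hypothetical, and the sketch ignores activations, communication overhead, and any redundancy needed for unreliable nodes.

```python
import math

# Figures quoted in point 6 of the thread.
TARGET_PARAMS = 300e9        # 300B-parameter model
DEVICE_MEM_GB = 32           # "<32GB memory per device"
NETWORK_FLOPS = 20e18        # "20 EFlops", ASSUMED to mean sustained aggregate FLOP/s
DEVICE_COUNTS = (10_000, 20_000)
RUN_WEEKS = (4, 6)

# ASSUMPTION: bytes of state held per parameter (not from the post).
BYTES_PER_PARAM = {
    "bf16 weights only": 2,
    "mixed-precision Adam state": 16,  # rule of thumb: weights, grads, fp32 master copy, 2 moments
}


def min_shards(params: float, bytes_per_param: int, device_mem_gb: float) -> int:
    """Fewest devices that can hold the model state at all (ignores activations)."""
    total_gb = params * bytes_per_param / 1e9
    return math.ceil(total_gb / device_mem_gb)


def per_device_flops(network_flops: float, devices: int) -> float:
    """Average FLOP/s each device must sustain for the network to hit its target."""
    return network_flops / devices


def total_ops(network_flops: float, weeks: float) -> float:
    """Total floating-point operations delivered over a run of the given length."""
    seconds = weeks * 7 * 24 * 3600
    return network_flops * seconds


if __name__ == "__main__":
    for label, nbytes in BYTES_PER_PARAM.items():
        print(f"{label}: >= {min_shards(TARGET_PARAMS, nbytes, DEVICE_MEM_GB)} devices to hold state")
    for n in DEVICE_COUNTS:
        print(f"{n} devices: {per_device_flops(NETWORK_FLOPS, n) / 1e12:.0f} TFLOP/s each")
    for w in RUN_WEEKS:
        print(f"{w} weeks: {total_ops(NETWORK_FLOPS, w):.3e} FLOPs total")
```

Under these assumptions, bf16 weights alone need at least 19 devices at 32 GB each, and full Adam state needs at least 150. Spreading 20 EFLOP/s over 10-20K devices means 1-2 PFLOP/s per device on average. That per-device figure is worth checking against real consumer-GPU specs before reading "20 EFlops" as a sustained number.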

Overall, d-training is poised to be a very exciting space. Some of its innovations are already being considered for broad AI applications.

4.15K
